In this post I will discuss some important points about TensorFlow: what it is, how it works, and how easily it can be used in production environments with Keras and eager execution.

Enterprises that have big data and want to put it to use need a machine learning solution: one that makes it easy to express many machine learning ideas and algorithms, and that scales so experiments and data analytics run quickly. Portability across platforms, reproducibility, and production readiness give such a system an edge in solving complex business problems with deeper and clearer insights. Facebook, Microsoft, Yahoo, and Google are some of the many companies that have already leveraged TensorFlow’s learning capabilities, which helps explain why it’s one of the most popular machine learning and deep learning libraries available.

TensorFlow Key Terms

TensorFlow is commonly used for: Deep Learning, Classification & Predictions, Image Recognition, and Transfer Learning.

Deep learning is a machine learning technique that teaches computers by providing examples. It is a key technology behind driverless cars, enabling vehicles to recognize stop signs, pedestrians, lampposts, and other obstacles. It also enables voice control in smart devices like phones, tablets, and TVs.

Classification models are designed to draw conclusions from previously seen data. Given new inputs, the model predicts the possible outcomes and assigns a value to each one. These values act as labels applied to the input data, and the assigned label is the prediction. Examples include email filters predicting whether an incoming email is “dangerous” or “safe,” or predicting whether a transaction is fraudulent based on previous spending habits.

Image recognition “is similar to deep learning but is specifically focused on the ability of models to identify objects, places, people, writing, and actions in images. Image recognition is used to perform a large number of machine-based visual tasks, such as labeling the content of images with meta-tags, performing image content search, and guiding autonomous robots, self-driving cars, and accident avoidance systems [1].”

Transfer learning will be covered in more detail below.

What is TensorFlow?

TensorFlow is an open source library for numerical computation and large-scale machine learning. Created by the Google Brain team and released as open source in November 2015, TensorFlow bundles together many machine learning and deep learning models and algorithms. It uses Python, with additional languages currently in development, to provide a front-end API for building applications with the framework, and it executes those applications in high-performance C++.

TensorFlow applications can run on almost any platform: local machines, cloud clusters, iOS and Android devices, CPUs, or GPUs. Because Google created TensorFlow, Google Cloud Platform is highly optimized to run it and offers Tensor Processing Units (TPUs) for further acceleration. The resulting trained models can be deployed on almost any device.

How does TensorFlow work?

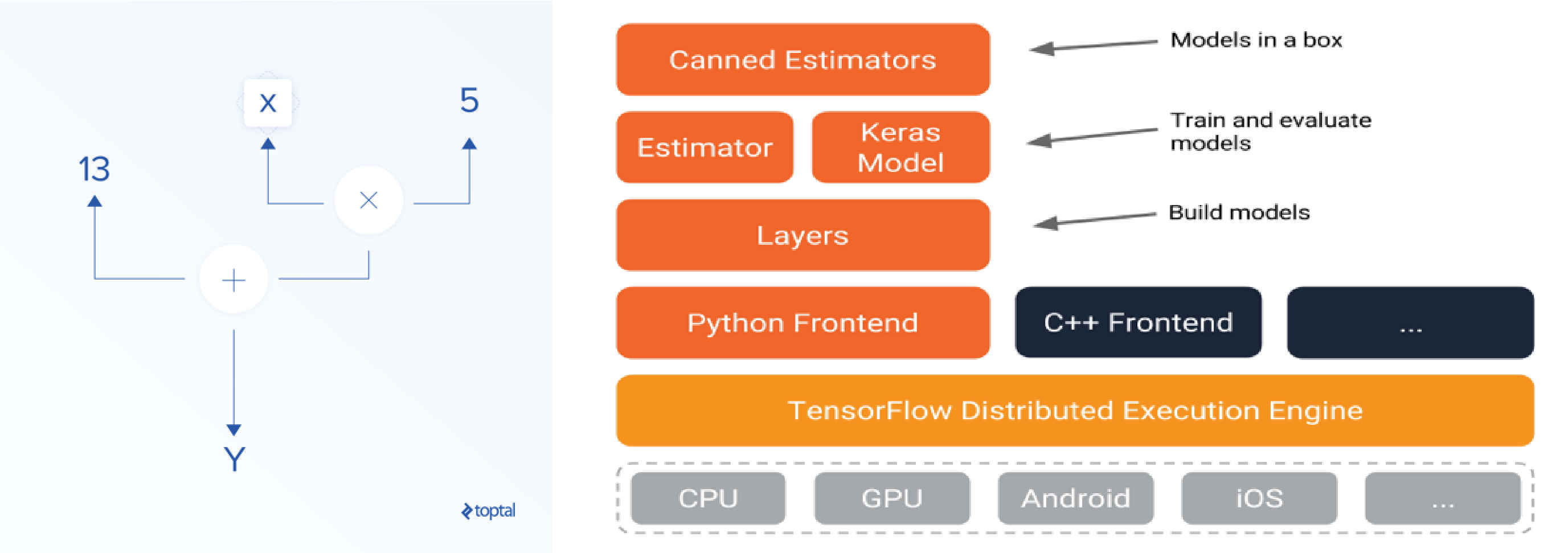

TensorFlow allows developers to create dataflow graphs, which are structured as directed acyclic graphs (DAGs). A dataflow graph describes how data moves through a series of processing nodes. Each node in the graph represents a mathematical operation, and each edge between nodes carries a tensor: a multidimensional data array (see figure 1).

Nodes and tensors in TensorFlow are Python objects, and TensorFlow applications are themselves Python applications. The nodes do not execute using Python; they leverage transformation libraries written as high-performance C++ binaries. Python just directs data between the objects and provides high-level abstractions to link them together.
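To make the node-and-edge idea concrete, here is a minimal sketch in plain Python. It is not TensorFlow's API: the `GRAPH` dictionary, the node names, and the `evaluate` helper are all made up for illustration, and plain numbers stand in for tensors.

```python
import operator

# Hypothetical toy graph: each node maps to (operation, names of its inputs).
# Names that do not appear as keys ("a", "b") are inputs fed in by the caller.
GRAPH = {
    "sum": (operator.add, ("a", "b")),
    "prod": (operator.mul, ("sum", "b")),
}

def evaluate(node, feeds, graph=GRAPH, cache=None):
    """Return the value flowing out of `node`, computing upstream nodes first."""
    cache = {} if cache is None else cache
    if node in feeds:        # input edge: the value is supplied by the caller
        return feeds[node]
    if node not in cache:    # compute each operation at most once
        op, inputs = graph[node]
        cache[node] = op(*(evaluate(i, feeds, graph, cache) for i in inputs))
    return cache[node]

# Data enters at "a" and "b", flows through "sum", and leaves at "prod".
result = evaluate("prod", {"a": 2, "b": 3})
print(result)  # (2 + 3) * 3
```

The graph itself is only a description. Nothing is computed until values are fed in, which is the property the rest of this post keeps coming back to.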

Credits: KDnuggets (figure 1, left); Google.com (figure 2, right)

In figure 2, the distributed execution engine abstracts the supported devices and provides access to the high-performance C++ core. Above the execution engine sit the front ends; current languages include Python and C++. The Layers API provides a simpler interface for constructing common deep learning models. Above that sit higher-level APIs, including Keras and the Estimator API, which make training and evaluating distributed models easier. Topping everything are the pre-trained models made available by the TensorFlow community.

However, there are some downsides to coding with only TensorFlow. If you are new to machine learning, the learning curve is steep, and the library is quite verbose. That verbosity gives you a great deal of control, but it is not ideal for quick data discovery and debugging. The technologies discussed next remedy these difficulties.

Utilizing Keras & Eager Execution

What are Keras and eager execution?

Keras is a high-level API for building and training deep learning models. Designed to be easy to use and to serve as an introduction to deep learning, it’s used for fast prototyping, advanced research, and production. Most important for this discussion are its official inclusion in TensorFlow and its support for eager execution.

In TensorFlow’s traditional graph mode, the developer must first create the full set of graph operations. Only then can the operations be run through a TensorFlow session object and fed data. With eager execution enabled, you can define operations with the Keras API, immediately run data through them, observe the output, and set standard Python debugger breakpoints within your code. This allows you to step into your deep learning training loops and examine the objects in your models as you would in regular Python. What once took 30 lines of code now takes only 11.

Eager execution lets you treat TensorFlow as ordinary, Pythonic code. You can create tensors, operations, and other TensorFlow objects by typing commands into Python and execute them straight away, without setting up the usual session infrastructure. As previously mentioned, this is useful for debugging, but it also allows dynamic adjustments to TensorFlow models while training is in progress. In short, always develop and debug eagerly, as it will make TensorFlow feel like regular Python.
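The difference between the two styles can be sketched in plain Python. This is a conceptual illustration only, not TensorFlow code: the `DeferredOp` class and the `scale` and `total` helpers are hypothetical names invented for this example.

```python
class DeferredOp:
    """Graph style: records an operation now, computes only when run() is called."""

    def __init__(self, fn, *inputs):
        self.fn = fn
        self.inputs = inputs  # either other DeferredOps or names of fed values

    def run(self, feeds):
        args = [i.run(feeds) if isinstance(i, DeferredOp) else feeds[i]
                for i in self.inputs]
        return self.fn(*args)

def scale(values, factor=2):
    return [v * factor for v in values]

def total(values):
    return sum(values)

# Deferred style: describe the whole pipeline first, feed data at the very end.
# No intermediate value exists until run() is called.
pipeline = DeferredOp(total, DeferredOp(scale, "x"))
deferred_result = pipeline.run({"x": [1, 2, 3]})

# Eager style: every call returns a concrete value immediately, so the
# intermediate result can be printed or inspected at a debugger breakpoint.
scaled = scale([1, 2, 3])
eager_result = total(scaled)

print(deferred_result, scaled, eager_result)
```

Both styles compute the same answer. The eager version simply exposes `scaled` as an ordinary Python value along the way, which is what makes stepping through a training loop practical.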

Why we want to use them

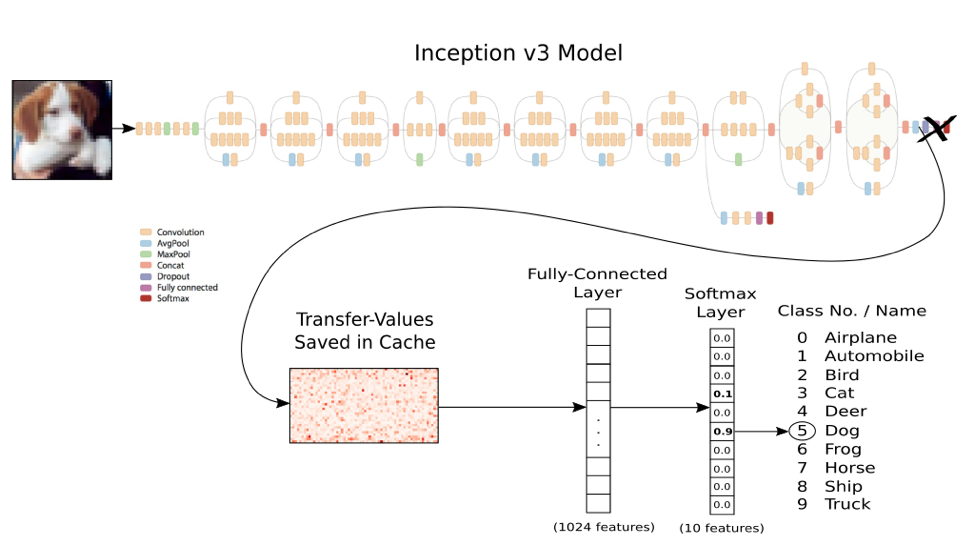

The pull for enterprise-level development is the vast library of pre-trained TensorFlow models, which you can leverage through transfer learning. Transfer learning takes the general abstractions produced by the penultimate layer of a pre-trained model and feeds them to your smaller, use-case-specific model. Many of the basic abstractions and classifications can be shared from model to model, reducing the amount of time, data, and computational resources necessary to create the final product. This allows for more agile development and quicker insights from your data.

Credits: Hvass-Labs/TensorFlow-Tutorials (transfer learning visualization)
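As a rough sketch of the transfer learning idea in plain Python: a frozen feature extractor stands in for the pre-trained model, and only a small head is trained on top of it. Everything here is an assumption made for illustration, including the `FROZEN_WEIGHTS` values, the four-example dataset, and the learning rate. A real project would load an actual pre-trained TensorFlow model instead.

```python
import math

# Stand-in for a pre-trained network. These made-up weights are never updated.
FROZEN_WEIGHTS = [[0.5, -0.2], [0.1, 0.8], [-0.3, 0.4]]  # 2 inputs -> 3 features

def extract_features(x):
    """Frozen 'penultimate layer': a linear map followed by ReLU."""
    return [max(0.0, sum(w * v for w, v in zip(row, x))) for row in FROZEN_WEIGHTS]

def predict(head, bias, features):
    """Small trainable head: logistic regression on the frozen features."""
    z = sum(w * f for w, f in zip(head, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Tiny use-case-specific dataset of (input, label) pairs, invented for this sketch.
data = [([2.0, 0.0], 1), ([1.5, 0.2], 1), ([0.0, 2.0], 0), ([0.2, 1.5], 0)]

head, bias, learning_rate = [0.0, 0.0, 0.0], 0.0, 0.5
for _ in range(500):
    for x, y in data:
        features = extract_features(x)          # reuse the frozen abstractions
        error = predict(head, bias, features) - y
        # Gradient step on the head only; FROZEN_WEIGHTS is left untouched.
        head = [w - learning_rate * error * f for w, f in zip(head, features)]
        bias -= learning_rate * error

predictions = [round(predict(head, bias, extract_features(x))) for x, _ in data]
print(predictions)
```

Only four numbers (three head weights and a bias) are learned here. That is the appeal of the approach: the expensive, general part is reused, and the task-specific part stays small.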

With the ability to easily create your own machine learning model, the application possibilities are endless. How about a “Fitbit for cows”? No problem. Or a model that sets that eye-wateringly high insurance quote because it just knows you’re a good investment? That exists too.

How to get started using TensorFlow with Keras

If you are new to TensorFlow and just want to get in on the action quickly, or found this blog intriguing and want to learn more, this will take you to a GitHub repository. There you’ll find links to different Jupyter notebooks hosted on Colaboratory. Nothing needs to be downloaded, and everything is provided. The notebooks offer different insights and high-level demos showcasing just how quick and powerful TensorFlow, run eagerly with Keras, can be.

Some similar technologies to explore:

- MLlib

- Microsoft Azure Machine Learning

- Caffe

- Torch

- Neon

- Spark

References

[1] Rouse, M. (2018). Image recognition. [online] WhatIs.com. Available at: https://searchenterpriseai.techtarget.com/definition/image-recognition [Accessed 2 Nov. 2018].
